Though you may be tired of the artificial intelligence hype and the growing number of AI features, avoiding the technology – and protecting your data from it – is increasingly difficult, experts say.

Robert Wahl, an associate professor of computer science at Concordia University Wisconsin, said AI is often foisted on people and that the difficulty in avoiding iterations of AI runs contrary to recent polling that suggests apprehension about the technology.

“It may be that we will always have a percentage of consumers that either do not want artificial intelligence information, or at a minimum want to be given the choice on whether they receive artificial intelligence information,” he said. Prof Wahl pointed out that it is often incredibly difficult and in some cases impossible to opt out of AI features, as more and more companies try to secure their future through the technology.

“Many companies want to include AI features because of all the attention that AI is currently getting,” he said. “The perception may be that a reference to artificial intelligence on a website may lead to increased sales and help drive revenue.”

He added that he recently tried to turn off Google's much-vaunted “AI Overviews” feature, which provides users with summarised, AI-generated answers to various questions near the top of their search results. “I wasn't able to locate an easy way to opt out,” he said.

According to Google, although there are some ways for developers to minimise “AI Overviews” in some areas, there is no way for the average user to turn off the feature. “AI Overviews are part of Google Search like other features, such as knowledge panels, and can't be turned off,” a question and answer page provided by Google explains.

Prof Wahl also said figuring out how to turn off AI features on various tools, websites and apps can be difficult – and that's no accident. Companies want the AI tools to be used, tested and improved quickly, he said, and the fear of losing the competitive edge is turning consumer choice into an afterthought.

“It seems that the fairest way to proceed with the roll-out of artificial intelligence is to give consumers the ability to 'opt in' rather than having to 'opt out' from every application or website that uses it,” he said, noting that so far, those options have been the exception rather than the rule.

Opting out of AI data training: what doesn't work

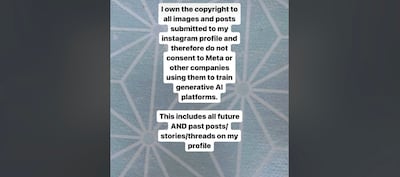

Another issue is the use of social media content to train AI large language models. If you use Instagram or Facebook for any length of time, it is almost inevitable that you will come across a post purporting to forbid Meta – parent company of Instagram and Facebook – from using the poster's data to train its AI products.

“I own the copyright to all images and posts submitted to my Instagram profile and therefore do not consent to Meta or other companies using them to train AI platforms,” the posts often read. Most technology and legal experts say the many versions of this slide in circulation cannot stop your content from being used to train AI.

Making accounts private through the app settings can give some protection. Often, however, the fine print of user agreements gives platform owners a lot of flexibility in how content and data are used.

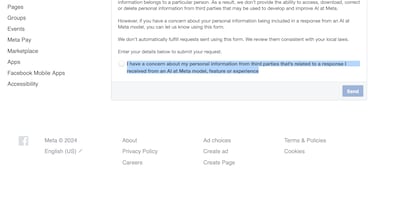

Prof Wahl points out that US laws are generally weaker than EU laws in the technology and data space. As a result, it is much easier to find settings to opt out of having content used for AI training on sites in European countries. In some instances, users were sent a notification with an option to opt out. For Instagram and Facebook in the US, however, no such option exists.

“If you have a concern about your personal information being included in a response from an AI at Meta model, you can let us know using this form,” reads a section of Facebook's privacy help centre for US users. “We don’t automatically fulfil requests sent using this form. We review them consistent with your local laws.”

Prof Wahl said that currently, and especially in the US, users do not have much leverage when it comes to getting options to opt out of AI. Time and user annoyance, he explained, may eventually change that.

“What seems to happen typically with emerging technology is that it is initially unregulated until enough problems are observed, and then governments step in and put regulations in place to protect consumers,” he said. For now, however, he said there is a tremendous burden on users to try to figure out difficult and often buried AI privacy settings or opt-out forms – with no guarantee of success.