US chip maker Intel has formally launched its new generative artificial intelligence processor, putting it in direct competition with Nvidia and other chip manufacturers on the front lines of the latest battleground in technology.

The Gaudi 3 chip – first teased at Intel's AI Everywhere event in December – was unveiled at the company's Vision 2024 conference in Phoenix on Tuesday, and is being positioned as critical to Intel's future road map, defined by an AI-for-all strategy.

“What aspect of your life … is becoming more digital? Everything – and everything digital runs on semiconductors,” Pat Gelsinger, chief executive of Intel, said in his keynote address. That means the work of AI chip companies is increasingly important to the world, companies and families, he added.

“We're just seeing this accelerate as this era of AI is driving this huge momentum.”

California-based Intel's plans are also in line with the US Chips Act, the landmark 2022 legislation that provides nearly $53 billion for manufacturing, research and development in America's semiconductor sector to boost its competitiveness.

The act is “the most important industrial policy since World War Two”, Mr Gelsinger said.

What is Gaudi 3?

Intel's Gaudi 3 is an AI accelerator that can power AI systems “with up to tens of thousands of accelerators” and offers a 50 per cent boost in memory bandwidth over its predecessor, 2022's Gaudi 2.

This, Intel says, would deliver a “significant leap” in AI training and inference for global enterprises looking to deploy generative AI at scale.

The chip is designed to work with open, community-based software, allowing enterprises to “scale flexibly” even in the largest deployments.

How does the Gaudi 3 stack up against Nvidia's chips?

At the time of its launch in 2022, Intel claimed that the Gaudi 2 was the “only” benchmarked alternative to Nvidia's Hopper H100 in terms of performance.

The Gaudi 3 is now the direct challenger to the Hopper, with Intel claiming it will train certain AI models about 1.7 times faster and be 1.5 times better at running software, as well as being more power-efficient.
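
To put the headline figure in perspective, a speed-up factor can be converted into time saved. The sketch below is purely illustrative arithmetic: the 1.7 figure is Intel's claim as reported above, while the 100-hour baseline and the function names are hypothetical and do not come from Intel or Nvidia.

```python
def time_fraction(speedup: float) -> float:
    """Fraction of the baseline time needed at a given speed-up factor."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 / speedup


def time_saved_pct(speedup: float) -> float:
    """Percentage of the baseline time saved at a given speed-up factor."""
    return (1.0 - time_fraction(speedup)) * 100.0


# Hypothetical 100-hour training run on the baseline chip (an assumption,
# not a published figure); 1.7 is Intel's claimed training speed-up.
baseline_hours = 100.0
claimed_speedup = 1.7

gaudi_hours = baseline_hours * time_fraction(claimed_speedup)
print(f"{gaudi_hours:.1f} hours, {time_saved_pct(claimed_speedup):.1f}% less time")
```

In other words, if the claim holds, “1.7 times faster” would mean a job finishes in a little under three fifths of the original time, not that 70 per cent of the time is saved.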

Compared with the Hopper H200, Intel said the Gaudi 3 is practically on a par.

The Gaudi 3 is also expected to deliver a 50 per cent faster time to train on average across certain parameter sizes of Meta Platforms' Llama 2 and OpenAI's GPT-3 models.

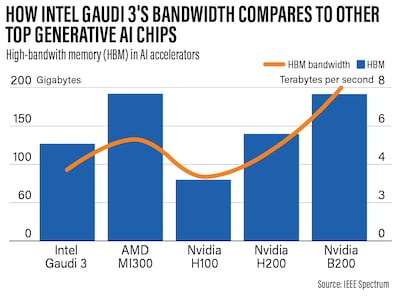

In terms of high-bandwidth memory, Gaudi 3 has more HBM than Nvidia's H100, but less than the H200, the latest B200 and AMD's MI300, according to data from IEEE Spectrum, the publication of the Institute of Electrical and Electronics Engineers.

HBM, introduced in 2015, is a computer memory interface designed specifically for high-performance applications, offering faster data speeds at lower power consumption.

In addition, Gaudi 3's inference throughput – a measurement for speed and efficiency in machine learning – is said to outperform the H100 by 50 per cent on average across certain parameter sizes of the Llama and Falcon models, the latter of which was developed by Abu Dhabi's Technology Innovation Institute.

How much is the Gaudi 3 and when will it be released?

Here's another interesting bit: Intel did not provide any pricing guidance for the Gaudi 3. But there's context to this.

The Gaudi 3 is primarily aimed at Nvidia's Hopper chips and Intel did not make any comparisons to Nvidia's newest chip, the Blackwell B200.

While Intel claims that the Gaudi 3 will be more affordable, especially compared to Nvidia's products, its pricing will presumably be in line with that of the Hopper chips, which cost between $25,000 and $40,000 each, with entire systems priced at as much as $200,000, industry estimates have shown.

The B200 chip, meanwhile, would cost between $30,000 and $40,000, Nvidia chief executive Jensen Huang told CNBC on March 19, one day after it was unveiled. The B200 is expected to start shipping later in 2024.

The Gaudi 3 will be made available by the third quarter of 2024, with a select group of companies – Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro – getting first crack at it in the second quarter of 2024, Intel said.

A step behind?

Given that the Gaudi 3 is being matched against Nvidia's soon-to-be-older Hopper chips, it is worth asking why Intel did not choose to go directly against the newer Blackwell series. Effectively, it's a generation behind Nvidia.

However, it does make sense if you consider Intel's strategy of making generative AI available to a wider user base, and it could be a step to reel in more users as it prepares – while observing the market and competition – for its next big move.

Still, Intel may face a challenge when it comes to pricing, considering the older Hopper and newer Blackwell have similar price ceilings at this point. It's unclear how much Intel is willing to reduce its price.

The fact that Hopper chips are so popular that it's getting harder to find them, with consumers looking for alternatives like those from AMD, is one advantage that might work in Intel's favour. This is in keeping with Intel's other strategy of closing the gap in the market, which it hinted at with the launch of the Gaudi 3.

Presumably, the Gaudi 3 will cost a great deal less than Nvidia's top model. If Intel plays its chips well, this might pave the way for an even greater threat to the leading player in the generative AI chip market down the road.