As the world grapples with a surge in AI-enhanced content and deepfake videos, amid concern about potential misuse, researchers in Abu Dhabi are developing technologies that could play a crucial role in countering deception.

“We have developed a bunch of technologies that have significantly advanced the detection and characterisation of deepfakes,” said Hao Li, associate professor of computer vision at Mohamed Bin Zayed University of Artificial Intelligence.

“It’s more than just about detecting,” said Prof Li, who is also director of the university's Metaverse Centre. “It’s about where does it come from and what was its intention.”

In 2022, the university was listed as an applicant on a US patent for a "video transformer for deepfake detection", which describes a system that would include “a display device playing back the potential deepfake video and indicating whether the video is real or fake.”

The patent is only one of many areas of MBZUAI research addressing the growing use of AI video tools and AI-generated content, Prof Li said.

“It’s becoming more and more difficult to create an undetectable deepfake,” he added.

Prof Li said the university was also making strides in identifying disinformation and fake news, pointing to Preslav Nakov, a professor of natural language processing whose research focuses on disinformation analysis.

"He's the go-to expert in fake news detection," he said.

The efforts come amid growing global concern over the proliferation of AI-driven video manipulation tools, and of applications that can create photorealistic images from prompts of only a few sentences.

Last year, there were at least 121 "AI incidents" that later prompted clarification, a 30 per cent increase from the previous year, according to cyber security firm Surfshark.

“This figure accounts for one-fifth of all documented AI incidents between 2010 and 2023, marking 2023 as the year with the highest number of incidents in the history of AI,” said Agneska Sablovskaja, a researcher at Surfshark.

Celebrities including Tom Hanks, Scarlett Johansson and Emma Watson have been targeted by AI-powered image generators, which were used to create unauthorised content showing the actors promoting various products.

Even Pope Francis found himself at the centre of a viral AI-generated photo that portrayed the pontiff wearing a white puffer jacket.

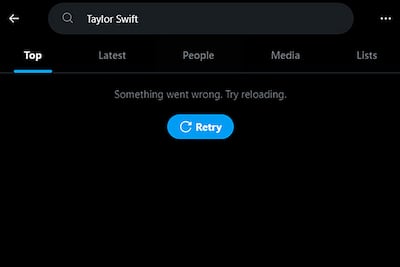

Most recently, X, formerly Twitter, had to temporarily disable searches for Taylor Swift after users flooded the platform with fake and malicious AI-generated images of the pop superstar.

The images of Swift prompted a rare bipartisan effort from US senators: the Defiance Act, a bill intended "to hold accountable those responsible for the proliferation of nonconsensual, sexually explicit deepfake images and videos".

"Victims have lost their jobs, and they may suffer ongoing depression or anxiety," said the US Senate Committee on the Judiciary.

"By introducing this legislation, we're giving power back to the victims, cracking down on the distribution of the deepfake images and holding those responsible for the images accountable."

It remains to be seen if the proposed legislation will become US law.

The international reach of artificial intelligence, Prof Li said, poses unique challenges for legislators and governments trying to set guardrails for AI and deepfakes.

"It's very difficult to implement something global," he said.

"The problem is that the legal frameworks and regulations are not really catching up with the technology and how people are using the technology. Even if you punish the person [who creates the deepfake], the harm has already been done."

However, Prof Li said that while there is plenty of cause for concern, deepfake detection research around the world is quickly catching up, and he remains optimistic about the benefits of AI for photos, videos and sound.

He said there were many applications, such as dubbing translated speech, where the technology could be very useful.

He pointed to an AI-enhanced video of a speech delivered by Argentinian President Javier Milei at the World Economic Forum's 2024 annual meeting, which was dubbed into English using a tool from a company called HeyGen.

Unlike most translated videos, in which the voice does not match the speaker and mouth movements do not match the sounds, the video of Mr Milei appeared to show him speaking flawless English.

The speech was viewed millions of times on various social media platforms and generated ample discussion about the potential upside of AI-enhanced video tools.

"The technology is getting very robust," said Prof Li, who is also chief executive and co-founder of Pinscreen, an AI company focused on developing photorealistic virtual avatars.

"It's a great example of how you can use it [AI] for something that's not a bad thing," he added.

Similarly, AI video tools such as those offered by UAE-founded start-up Camb.AI have been used to maximise the international reach of videos.

The Australian Open announced that it would use Camb.AI's technology for post-match interviews with tennis stars.

"Our mission is to make every sport truly global, maximising worldwide fan engagement," said Camb.AI co-founder Akshat Prakash, shortly after the company's partnership with the Australian Open was announced in January.

"Our technologies enable fans around the world to watch any sport in a language of their choice, real-time."

Throughout the tournament, a bumper crop of videos appeared on the Australian Open's YouTube page.

Novak Djokovic and Coco Gauff could be seen "speaking" fluent Spanish, while Jannik Sinner "conversed" in Mandarin.

Metaverse meets AI

Meanwhile, at MBZUAI, Prof Li said AI would also help fulfil the ambitious vision of the metaverse: virtual spaces in which people, represented by avatars, interact.

Last year, the university announced the MBZUAI Metaverse Centre (MMC) to spearhead the development of AI-infused metaverse technologies.

"It encompasses multiple research labs," he said.

"My lab works on generative reality; we have another lab focusing on digital twins, another focused on generative music, and yet another focused on multimodal generative AI."

AI, he said, has made it possible to address some of the criticisms levelled at early iterations of the metaverse, which some felt looked too much like video games.

"We're able to now build something that looks like the real thing," he said, sitting in front of a computer fitted with seven graphics processing units and connected to a holographic display capable of showing high-fidelity deepfakes and immersive virtual worlds.

"The whole idea is to build a simulation that can create a learning experience for people in an immersive way," he said.

AI and metaverse advances could be used effectively in education, he added, allowing language students to travel virtually to other countries and learn from realistic virtual teachers.

"These things will become possible in the next decade," he said.